There’s Someone Else Here, and They Look Suspiciously Digital

Hey, so I don’t know if you’ve all noticed, but thinking doesn’t work the way it did a few decades ago. These days we offload a lot of our thinking, something that might have been unthinkable to our parents and their parents.

You write a message, you revise it, you take a suggestion that no human voice came up with, reject another you didn’t ask for, and hit send. Nothing unusual; just another day thinking and working in 2025.

We don’t remember agreeing to share our thinking with non-human systems. There was no meeting, no referendum, no awkward town hall where someone raised their hand to ask a clarifying question. And yet here we are.

So what’s going on?

The Moment Thinking Became a Group Project

We like to imagine ‘the thought process’ as something private, something internal. You with your coffee cup, stylishly alone with your thoughts; me staring at a wall for the last forty-five minutes, hard enough to make the concrete uncomfortable. Point is, it’s all very me.

But we’ve been thinking with tools for centuries. Think about it: a notebook is a tool for recording your thoughts in an external bank, to be recalled whenever required. The same goes for everything from flowcharts and diagrams to other logic aids. All of these are ways of thinking with the help of external tools.

Modern digital systems are also external thinking tools; they just stopped being quiet. Whether it’s remembering something by checking a database, making decisions based on aggregated rankings, or even something as simple as deciding not to buy a product after a Google search, these are all thoughts and decisions you would not have made without the extra processing power a computer provides, and all of them are part of the cognitive process of a 21st-century human.

Intelligence Was Never Solo to Begin With

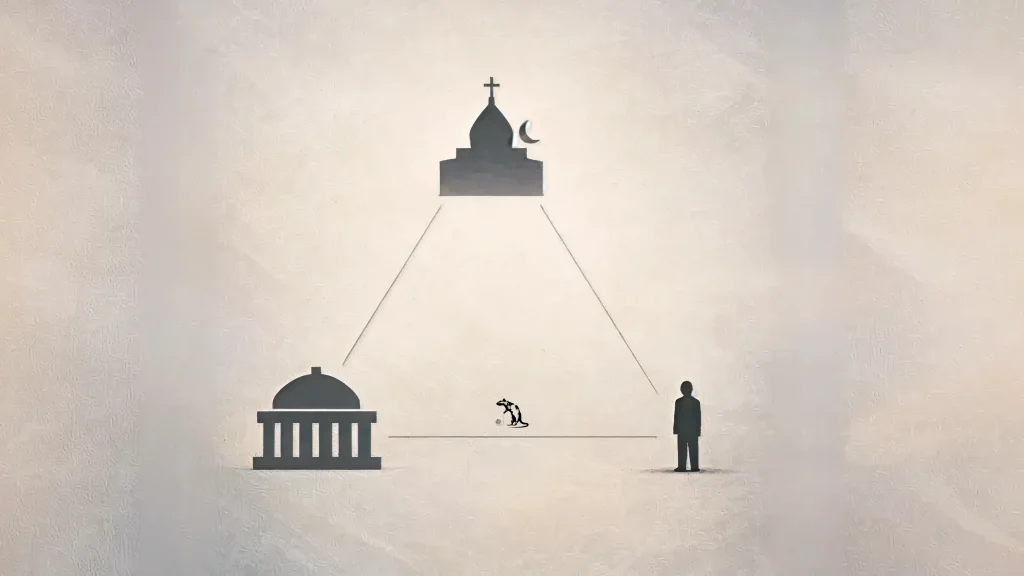

Let’s expand a bit on that external-help factor. Language, writing, institutions: human intelligence has always been scaffolded. The myth was independence, not collaboration.

Even before the digital explosion, human intelligence was never self-contained. Writing remembers what brains drop. Calendars decide what matters tomorrow. Filing systems preserve what would otherwise vanish. You can even argue that language itself is an expression of externalized thought.

What we often mistake for individual brilliance is usually just the visible portion of a much larger collective process, one that often includes tools, learned symbols, conventions, and shared memory. Intelligence has always leaned on external supports; we just stopped noticing them once they became familiar.

These weren’t threats to intelligence; they were aids. But something is different now.

Enter the Non-Human Mind

Call it what you will: AI, the algorithm, information processing, databases; it all amounts to the same thing. Modern digital systems are brains in our midst.

Digital interfaces don’t sleep, don’t care, and don’t belong, yet they participate. While that old folder with the final report only helps you brainstorm what to do next year, the internet today says “Don’t think. Let me do that for you.” Digital systems are not just junior employees in the hierarchy of cognition; they’re decision-makers.

Technically, none of the digital tools mentioned above (search engines, algorithms, or any others) have intentions; they’re not alive, after all. They don’t even understand meaning, if we are being honest.

And yet they influence what gets remembered, surfaced, ignored or forgotten.

Culture Forms Whether or Not You Want It To

Once humans and digital systems collaborate at scale, norms emerge. Habits form. Expectations settle in. Congratulations: you have culture.

You see, culture doesn’t require intention; it just needs repetition.

Once people rely on the same or similar systems to search, remember, prioritize, and decide, patterns form. Certain ways of phrasing questions work better. People collectively start to approach a given situation one way more often than any other. Certain things start to matter more; certain outcomes start to feel more natural.

No one announces these norms; that’s how culture works. They simply emerge and become the default.

But wait, are we really talking about culture and the digital in the same breath?

Sociology Loading, Please Wait

Quick introduction in case you need it: sociology is basically the study of how human beings interact with each other.

Most sociological frameworks assume societies are made of humans. Even when systems or institutions are discussed, agency is assumed to lie with the humans in the system: thinking, choosing, intending, disregarding. All people things.

But what happens when the humans aren’t the only ones making and influencing decisions in a social system? Well, most social ideas kind of start to break.

In a system where digital mediation has agency, how do we define this arrangement? Is the system an actor? A structure? An environment? None of these categories really seem to fit comfortably. We have no clean language for systems where agency is distributed across biology and software.

This isn’t so much a failure of sociology as a lag. In a world where innovations happen by the hour, social reality has shifted faster than research can explain it. And when theory lags behind lived experience, confusion and assumptions fill the gap.

The Participation Problem

If a system meaningfully shapes decisions, does it count as part of the social process? And if it does, but can’t be held accountable, what do we do with that mismatch?

These questions sound very techno-dystopian, but they suddenly become very real when you realize just how often outcomes are explained by pointing at the system: “it was the top search result”, “it’s what all the top fashion influencers wear”. Sound familiar?

The problem isn’t technical so much as social. We’re trying to run a society using assumptions about participants that no longer fully apply. After all, the discomfort people feel around digital systems has never been about their usefulness; it’s about their uncanniness.

How Responsibility Works When Agency Can Be Digital

Historically, participation has implied accountability. I don’t know about you, but I always got called to the principal’s office, even if I was just the one recording. If you shaped outcomes in a system, you could generally be questioned about them and asked to justify your role.

Digital systems break that link disastrously. They influence decisions constantly, yet they cannot be held responsible in any concrete way. It’s just software, after all. You might as well go yell at the clouds for not being sunny enough.

When outcomes emerge from human–AI collaboration, blame and credit get blurry. “The system suggested it” is not the same as “no one decided”, but we treat it that way anyway.

Pointing to the system explains the process, but it doesn’t explain the choice. Treating it as if it does quietly erodes accountability without ever replacing it with anything else.

Society runs on responsibility. Fuzziness here doesn’t scale well.

Culture Without Intent

Coming back to culture for a bit. Culture usually emerges from shared meaning and negotiation. Participants argue about what’s useful and negotiate what isn’t. They reach a conclusion and decide to collectively follow it. There you have it: a culture.

But what happens when one participant doesn’t understand meaning at all yet still shapes behavior? You get what we have today. Culture, with absolutely no defining logic behind it. Culture, without intent.

Norms form around systems that don’t even understand that they’re participating. Behavior adapts to patterns that no one explicitly chose. Who knows why that particular reel format became popular this month? And who cares? It’ll be gone by next week anyway, replaced by something just as pointless.

In a society where the participants doing much of the influencing don’t understand their influence the way we do, we end up with a culture where things happen pointlessly, structurally, illogically, but most importantly, cyclically: dystopian levels of cyclically.

Power, Without Anyone to Argue With

Traditional power was social. You could resist it, negotiate with it, or at least argue against it. Digital influence doesn’t seem to listen.

You can’t persuade a ranking system. You can’t appeal to a database. You can only adjust inputs, redesign processes, or adapt yourself. Resistance becomes indirect and often invisible. Arguing with a wall and arguing with the algorithm amount to more or less the same thing.

This subtly changes how power operates. It’s less confrontational, more ambient. Less personal, more infrastructural. People still experience influence, but it’s harder to locate, harder to challenge, and harder to name. Power hasn’t disappeared. It’s just taken a form that doesn’t respond to the usual social tools.

For example, the biggest traditional tool of resistance has been social exclusion. That doesn’t really work anymore. After all, you can’t really cast out a participant that is also the structural framework of social interaction itself.

What are you gonna do? Not use Google?

Social Trust in a Hybrid System

Trust used to flow between people and institutions. Now it also flows through tools.

In theory, these tools are neutral, so their role shouldn’t matter, but it doesn’t feel that way. People often remark that one digital system or another seems to lean toward or against particular social, philosophical, cultural, or even political ideologies.

But then again, it’s just code; how could it have emotion? And yet it does, or at least it has something that feels close enough to emotion to alter our worldviews.

So where do trust mechanisms sit in all this? Well, when influential actors become constant and sort of invisible, trust becomes harder to place. When you trust, are you trusting the tool, the system, the institution, the person behind it, or the person working with it?

Trust thrives on clarity. These hybrid systems blur that clarity while increasing dependence. The result is a form of trust that works, seemingly efficiently, until something breaks, at which point it becomes unclear how trust should be repaired, and whether it is even valid to say it existed in the first place.

That ambiguity isn’t necessarily catastrophic, but it is deceptively fragile.

The Weird Intimacy of Thinking With Something Not Alive

Let’s be honest, thinking alongside digital systems is weird. It’s not friendship. It’s not obedience (in either direction). It’s not even really tool-use, at least not in the traditional sense.

It’s something in between.

These systems often learn things about us that even our loved ones don’t fully understand. And yet, because of how they work, sharing our most personal, intimate secrets with them never quite feels personal.

My doctor might not find out about a panic attack for weeks, but you can be damn sure my algorithm knows instantly.

We rarely talk about this intimacy, mostly because it doesn’t fit into existing social definitions. Naming it would require admitting how deeply it’s woven into our everyday thought. Normality, in this case, arrived faster than understanding.

A Question We’ll Have to Answer Soon

When culture includes non-human minds, we may need to redefine what social behavior even means.

Not because machines are becoming human (that might never happen, or at least not soon, hopefully), but because people are learning to think, talk, and interact in ways that stretch our old definitions of participation, responsibility, power, and trust to their breaking point.

The collective intelligence already exists. The society is already hybrid. Understanding just hasn’t caught up yet.

And historically speaking, that’s usually where the interesting part begins.